Log Processing

LogRhythm processes your organization's raw log data and presents it in a way that makes it easier to analyze and protect your network operations. For a complete list of metadata fields that LogRhythm parses, calculates, and derives from raw log data, see the LogRhythm Schema Dictionary and Guide. Note that while all metadata fields are available in the Web Console, not all are available in the Client Console.

LogRhythm components collect and process log messages as follows:

- LogRhythm Agents monitor devices in the network (servers, routers, etc.), where they collect raw log data and forward it to a Data Processor. Agents can be installed on both Windows and UNIX platforms.

- The Data Processor is the central processing engine for log messages. Its integrated Mediator Server contains the Message Processing Engine (MPE), which is responsible for the following:

  - Assigning a Log Source based on where the log originated. For example, a log could come from a Windows server, a UNIX host, a networking device, or an application.

  - Identifying a Log Type (Common Event), which is a short, plain-language description of the log.

  - Assigning a Log Classification that includes one of three major classifications (Operations, Audit, or Security) and a specific sub-classification.

  - Identifying Events, which are important logs that may be of interest or concern (typically less than 5% of your total log activity).

  - Parsing and deriving metadata from the raw logs and, when applicable, adding contextual information such as the known application or geographic location.

The Data Processor forwards logs categorized as MPE events to the Platform Manager. If the Advanced Intelligence Engine (AI Engine) is integrated into the LogRhythm Enterprise deployment, the Data Processor can also send copies of log messages to the AI Engine.

The AI Engine (if integrated) evaluates logs using a pattern-matching and behavioral rule set that can correlate logs and detect complex issues such as sophisticated intrusions, insider threats, operational issues, and compliance issues. If a log triggers one of the AIE rules, the AI Engine generates a new AIE event for the Platform Manager database.

The Platform Manager receives event data from the Data Processors and the AI Engine. It includes an Alarming and Response Manager (ARM), which evaluates the events against rules for alarms and initiates the appropriate response. The Platform Manager also serves as the central point of access for LogRhythm Enterprise. It houses the databases for events, alerts, and metadata, and includes all the configuration information.

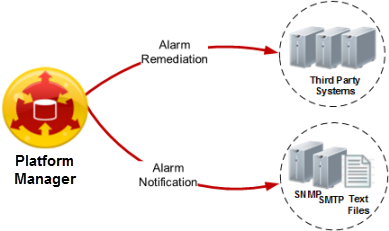

If an alarm is generated, one or both of the following actions can be triggered:

- Notification. An alert is sent to administrators via SNMP traps, email (SMTP), or text files.

- Remediation (SmartResponse action). An automated script responds with an action to resolve the issue.

Debug/Verbose Logging

LogRhythm Message Processing Engine (MPE) rules are designed to parse log messages at the Information (syslog severity) level and above. By their nature, Debug and Verbose log messages contain low-level information that would not normally appear (under default logging configurations) in typical operational, audit, or security log messages. Given this, the MPE intentionally does not parse or normalize log messages at the Debug or Verbose logging levels, for reasons that are partly conceptual and partly mechanical.
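In syslog terms (RFC 5424), Informational is severity 6 and Debug is severity 7, and the severity is the PRI value at the start of the message modulo 8. The following minimal Python sketch shows how a collection-side filter could keep only Informational and more severe messages. The function names and the idea of filtering on the PRI field are illustrative assumptions, not a LogRhythm feature; Verbose has no syslog severity of its own, so it is not covered.

```python
import re
from typing import Optional

# Syslog severities (RFC 5424): 0 = Emergency ... 6 = Informational, 7 = Debug.
INFORMATIONAL = 6

# A syslog message starts with "<PRI>", where PRI = facility * 8 + severity.
PRI_PATTERN = re.compile(r"^<(\d{1,3})>")


def severity_of(message: str) -> Optional[int]:
    """Return the severity encoded in the PRI field, or None if there is none."""
    match = PRI_PATTERN.match(message)
    if match is None:
        return None
    return int(match.group(1)) % 8


def keep_for_mpe(message: str) -> bool:
    """Hypothetical filter: keep Informational (6) and more severe messages."""
    severity = severity_of(message)
    # Messages without a PRI field are kept because their level is unknown.
    return severity is None or severity <= INFORMATIONAL


print(keep_for_mpe("<134>Aug 13 15:21:19 fw: interface up"))  # True (severity 6)
print(keep_for_mpe("<135>Aug 13 15:21:19 fw: buffer dump"))   # False (severity 7)
```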

Most devices generate an order of magnitude more log volume when set to the Verbose or Debug logging level. This extra volume affects the system on multiple fronts, most critically MPE processing and Data Indexer (DX) indexing. Because Verbose and Debug logs are processed through every rule in the policy before they inevitably hit Catch All rules, they cause not only an immense MPE slowdown but also many stalled or disabled rules, spooling, and overall poor performance. Indexing is also affected: the logs cause a backlog of spooled reliable persist files, resulting in poor DX performance.
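The following toy model, in Python, illustrates the mechanical cost described above under a first-match assumption. It is not how the MPE is implemented: the rule names and patterns are hypothetical, and a real policy can contain many more rules. A log that matches a specific rule stops early, while a log that matches nothing specific is tested against every rule before it lands in Catch All.

```python
import re
from typing import List, Optional, Tuple

# Toy policy: specific rules first, Catch All last. Names and patterns are
# hypothetical and much smaller than a real policy.
POLICY: List[Tuple[str, re.Pattern]] = [
    ("Denied Packet", re.compile(r"%ASA-\d-106014: Deny inbound")),
    ("Built Connection", re.compile(r"%ASA-\d-302013: Built")),
    ("Teardown Connection", re.compile(r"%ASA-\d-302014: Teardown")),
    ("Catch All", re.compile(r".*")),
]


def first_match(message: str) -> Tuple[Optional[str], int]:
    """Return the first matching rule and how many rules were evaluated."""
    evaluated = 0
    for name, pattern in POLICY:
        evaluated += 1
        if pattern.search(message):
            return name, evaluated
    return None, evaluated


specific = "GSC-Internet-FW: %ASA-3-106014: Deny inbound icmp src INSIDE:4.2.1.3"
debug = "GSC-Internet-FW: debug: packet buffer dump follows"

print(first_match(specific))  # ('Denied Packet', 1)
print(first_match(debug))     # ('Catch All', 4)
```

In this model, multiplying the message count while most of the new messages fall through to Catch All increases total rule evaluations faster than the message count alone.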

While Debug and Verbose logging can be useful for development and system troubleshooting, enabling it on high-volume log sources or for extended periods can drastically degrade overall system performance. For this reason, the LogRhythm MPE does not normalize these log messages.

Log Processing Basics

The devices in your network constantly generate thousands of raw logs. These logs come in a wide variety of formats and vary in how important they are to maintaining and protecting your network. LogRhythm processes raw log data to make the information available to you in a meaningful and uniform context.

Understanding how LogRhythm processes the raw log data helps you configure your LogRhythm deployment to get the fullest benefit and most useful output. LogRhythm log processing includes:

- Initial Processing

  - Assign a Log Source

  - Identify a Common Event

  - Assign a Classification

  - Identify Events

- Metadata Processing

  - Parsed data

  - Calculated data

  - Derived data

In the following examples, we will use this raw log:

08 13 2009 15:21:19 1.1.2.1 <LOC4:ERRR> Aug 13 2009 15:21:19 GSC-Internet-FW: %ASA-3-106014: Deny inbound icmp src INSIDE:4.2.1.3 dst INSIDE:4.1.1.1 (type 0, code 0)

After this example log has been reviewed, examples are provided for other common log types.

Initial Processing

Assign a Log Source

Log Sources are unique log originators on a specific Host. A Host can have more than one Log Source. For example, a typical Windows Server Host would include System Event Logs, Application Event Logs, and Security Event Logs.

LogRhythm identifies the Log Source to:

- Determine where the log originated.

- Assign the Log Source to the correct set of base rules called a Message Processing Engine (MPE) policy.

- Process the log against the assigned MPE policy. Processing against an MPE policy instead of the complete set of rules is far more efficient.

The information in the example log allows LogRhythm to identify it as a Cisco ASA Log Source, so LogRhythm can process the log against a rule base specific to that Log Source.
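The routing idea can be sketched in Python, assuming each Log Source type maps to an ordered list of regular-expression rules. The POLICIES table, its patterns, and match_rule are hypothetical; LogRhythm's actual rule format and matching engine are not described here. Because the example log is tested only against the Cisco ASA policy, rules written for other Log Source types are never evaluated.

```python
import re
from typing import Dict, List, Optional, Tuple

# Hypothetical MPE-style policies keyed by Log Source type. Each policy is an
# ordered list of (rule name, pattern); only the assigned policy is evaluated.
POLICIES: Dict[str, List[Tuple[str, re.Pattern]]] = {
    "Cisco ASA": [
        ("PIX-106014: Denied Packet", re.compile(r"%ASA-\d-106014: Deny inbound")),
    ],
    "Microsoft Event Log for XP/2000/2003 – Security": [
        ("EVID 540: Successful Logon", re.compile(r"EVID=540\b")),
    ],
}


def match_rule(log_source_type: str, message: str) -> Optional[str]:
    """Evaluate a log only against the policy assigned to its Log Source."""
    for rule_name, pattern in POLICIES.get(log_source_type, []):
        if pattern.search(message):
            return rule_name
    return None


RAW_LOG = (
    "08 13 2009 15:21:19 1.1.2.1 <LOC4:ERRR> Aug 13 2009 15:21:19 GSC-Internet-FW: "
    "%ASA-3-106014: Deny inbound icmp src INSIDE:4.2.1.3 dst INSIDE:4.1.1.1 (type 0, code 0)"
)
print(match_rule("Cisco ASA", RAW_LOG))  # PIX-106014: Denied Packet
```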

Identify a Common Event

Processing the log against the appropriate rule base identifies the Common Event—a short, plain-language description of the log.

Assign a Classification

Based on the Common Event, LogRhythm can assign a classification that includes one of three major classifications (Operations, Audit, or Security) and a more specific sub-classification. Here are some examples:

- Audit: Authentication Failure

- Operations: Network Deny

- Security: Suspicious
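A classification pairs one of the three major classifications with a sub-classification. The Python sketch below models that pairing and a lookup from Common Event to classification. The lookup table is a hypothetical illustration built from the processed example logs in this document, not LogRhythm's internal data model.

```python
from enum import Enum
from typing import Dict, NamedTuple


class Major(Enum):
    """The three major classifications."""
    OPERATIONS = "Operations"
    AUDIT = "Audit"
    SECURITY = "Security"


class Classification(NamedTuple):
    major: Major
    sub: str

    def __str__(self) -> str:
        return f"{self.major.value}: {self.sub}"


# Hypothetical Common Event -> Classification lookup, using the pairs shown
# in this document's processed example logs.
CLASSIFICATION_BY_COMMON_EVENT: Dict[str, Classification] = {
    "Denied Packet": Classification(Major.OPERATIONS, "Network Deny"),
    "Network Authentication - Admin": Classification(Major.AUDIT, "Authentication Success"),
    "File Access Denied": Classification(Major.AUDIT, "Access Failure"),
    "URL Access Allowed": Classification(Major.AUDIT, "Access Success"),
}

print(CLASSIFICATION_BY_COMMON_EVENT["Denied Packet"])  # Operations: Network Deny
```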

Results for initial processing of our example log:

Log Source = Cisco ASA

Matched Rule = PIX-106014: Denied Packet

Common Event = Denied Packet

Classification = Operations: Network Deny

Identify Events

LogRhythm recognizes that some logs are more important than others to your organization's operations, security, and compliance. During identification, the more important logs are designated as Events; generally, these make up around 10% of your total log activity.

Metadata Processing

LogRhythm parses, calculates, and derives metadata from logs. The metadata fields are stored in a database to improve performance when you use LogRhythm search tools such as Investigator, Personal Dashboard, and Reporting. Logs identified as Events, along with their metadata, are also sent to LogMart unless LogRhythm is specifically configured not to do so.

For a complete list of metadata fields, see the LogRhythm Schema Dictionary and Guide. Note that while all metadata fields are available in the Web Console, not all are available in the Client Console.

LogRhythm derived metadata fields store network and host information pulled from the log message.

Where space is restricted, such as in column headings, reports, and grids, i and o are used as abbreviations for Impacted and Origin. For example, Impacted Zone and Origin Zone may appear as iZone and oZone. Other metadata fields also use abbreviations or short names in report column headings when space is restricted.
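The i/o convention can be expressed as a small Python helper. This is a hypothetical sketch of the naming rule described above; it does not reproduce LogRhythm's full list of short names, which other fields define individually.

```python
# Hypothetical helper that shortens Impacted/Origin field names for narrow
# columns, following the iZone/oZone convention described above.
PREFIXES = {"Impacted ": "i", "Origin ": "o"}


def short_name(field_name: str) -> str:
    """Return the abbreviated column heading for a metadata field name."""
    for prefix, abbreviation in PREFIXES.items():
        if field_name.startswith(prefix):
            # Drop the spaces so "Impacted Zone" becomes "iZone".
            return abbreviation + field_name[len(prefix):].replace(" ", "")
    # Other fields have their own short names, which this sketch does not model.
    return field_name


print(short_name("Impacted Zone"))  # iZone
print(short_name("Origin Zone"))    # oZone
```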

After additional processing, the following information is available for our example log:

Raw Log

08 13 2009 15:21:19 1.1.2.1 <LOC4:ERRR> Aug 13 2009 15:21:19 GSC-Internet-FW: %ASA-3-106014: Deny inbound icmp src INSIDE:4.2.1.3 dst INSIDE:4.1.1.1 (type 0, code 0)

Log Processed Information:

Log Source = Cisco ASA

Matched Rule = PIX-106014: Denied Packet

Common Event = Denied Packet

Classification = Operations/Network Deny

Metadata:

Vendor Message ID = %ASA-3-106014

Protocol = ICMP

Origin Host = 4.2.1.3

Impacted Host = 4.1.1.1
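The parsed fields above can be reproduced with a single regular expression. The following Python sketch assumes the layout of this one message (ASA 106014) and reads the Vendor Message ID directly from the raw log; the pattern and function name are hypothetical and are not the actual MPE rule. Upper-casing the protocol to match "ICMP" is a presentation choice made for this sketch.

```python
import re

RAW_LOG = (
    "08 13 2009 15:21:19 1.1.2.1 <LOC4:ERRR> Aug 13 2009 15:21:19 GSC-Internet-FW: "
    "%ASA-3-106014: Deny inbound icmp src INSIDE:4.2.1.3 dst INSIDE:4.1.1.1 (type 0, code 0)"
)

# Hypothetical pattern for ASA message 106014; the "interface:address" pairs
# give the origin (src) and impacted (dst) hosts.
ASA_106014 = re.compile(
    r"(?P<vmid>%ASA-\d-106014):\s*Deny inbound (?P<protocol>\w+) "
    r"src \w+:(?P<origin>[\d.]+) dst \w+:(?P<impacted>[\d.]+)"
)


def parse_asa_106014(message: str) -> dict:
    """Extract the metadata fields shown above, or an empty dict on no match."""
    match = ASA_106014.search(message)
    if match is None:
        return {}
    return {
        "Vendor Message ID": match.group("vmid"),
        "Protocol": match.group("protocol").upper(),
        "Origin Host": match.group("origin"),
        "Impacted Host": match.group("impacted"),
    }


print(parse_asa_106014(RAW_LOG))
```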

Windows Event Log Example

Raw Windows Event Log

8/20/2008 11:17 AM TYPE=SuccessAudit USER=NT AUTHORITY\SYSTEM COMP=ServerOne SORC=Security CATG=Logon/Logoff EVID=540 MESG=Successful Network Logon: User Name: john.smith Domain:LEARNING Logon ID:(0x0,0x18DF15) Logon Type:3 Logon Process:Kerberos Authentication Package: Kerberos Workstation Name:Logon GUID:{06cc19fa-e96f-81ba-9ccb-17f0a4319ff4} Caller User Name:- Caller Domain:- Caller Logon ID:- Caller Process ID: - Transited Services: - Source Network Address: 10.1.1.100 Source Port:2678

Windows Event Log Processed Information

Log Source = Microsoft Event Log for XP/2000/2003 – Security

Matched Rule = EVID 540: Successful Logon

Common Event = Network Authentication - Admin

Classification = Audit / Authentication Success

Metadata Fields:

Vendor Message ID = 540

Origin Host = 10.1.1.100

Domain = Learning

Session = 0x0,0x18DF15

Process = Kerberos

Login = john.smith
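The Windows message is a run of labeled values, so each metadata field can be pulled out with its own pattern. The Python sketch below uses a shortened copy of the raw log above and hypothetical patterns; it is not the EVID 540 MPE rule. It takes the first match for each label, which matters because the full log also contains "Caller User Name:-" and "Caller Domain:-" later in the message. Values are returned exactly as they appear in the raw text (for example, LEARNING rather than Learning).

```python
import re

# Shortened copy of the raw Windows Event Log above (Workstation and Caller
# fields omitted). A raw string keeps the backslash in "NT AUTHORITY\SYSTEM".
RAW_LOG = (
    r"8/20/2008 11:17 AM TYPE=SuccessAudit USER=NT AUTHORITY\SYSTEM COMP=ServerOne "
    r"SORC=Security CATG=Logon/Logoff EVID=540 MESG=Successful Network Logon: "
    r"User Name: john.smith Domain:LEARNING Logon ID:(0x0,0x18DF15) Logon Type:3 "
    r"Logon Process:Kerberos Authentication Package: Kerberos "
    r"Source Network Address: 10.1.1.100 Source Port:2678"
)

# Hypothetical patterns, one per metadata field; each captures group 1.
FIELD_PATTERNS = {
    "Vendor Message ID": r"EVID=(\d+)",
    "Origin Host": r"Source Network Address:\s*([\d.]+)",
    "Domain": r"Domain:\s*(\S+)",
    "Session": r"Logon ID:\s*\(([^)]*)\)",
    "Process": r"Logon Process:\s*(\S+)",
    "Login": r"User Name:\s*(\S+)",
}


def parse_windows_540(message: str) -> dict:
    """Return the first match for each field; fields that do not match are skipped."""
    fields = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = re.search(pattern, message)
        if match:
            fields[name] = match.group(1)
    return fields


print(parse_windows_540(RAW_LOG))
```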

Flat File Log Example

Raw Flat File Log

917322,2009-01-26 15:07:33.250,516,"POS1050.pcommerce.local","192.168.1.100","W","Alert","File access control","TESTMODE: The process '<remote application>' (as user SFORCE\Stuart.Young) attempted to access 'C:\Iris\Bin\RestartSVS.bat'. The attempted access was a write (operation = OPEN/CREATE). The operation would have been denied.","FACL_DENY_TESTMODE","RestartSVS.bat","<remote application >",,,,,867,"Remote applications, read/write all files (file access via network share)",122,"Microsoft SQL Server",," SFORCE\Stuart.Young"

Flat File Processed Information

Log Source = Flat File – Cisco Security Agent

Matched Rule = FACL_DENY_TESTMODE: Alert

Common Event = File Access Denied

Classification = Audit / Access Failure

Metadata Fields:

Vendor Message ID = FACL_DENY_TESTMODE

Origin Login = Stuart.Young

Domain = SFORCE

Process = remote application

Object = RestartSVS.bat
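Because this flat file is comma-separated with quoted fields, a CSV reader can split it before the free-text message is searched for the user and process. The Python sketch below uses a shortened copy of the raw log above. The column positions were counted from this one sample and are assumptions, not a documented Cisco Security Agent export layout; the parse_csa function is hypothetical.

```python
import csv
import io
import re

# Shortened copy of the raw flat-file log above (first 17 fields only).
RAW_LOG = (
    r'''917322,2009-01-26 15:07:33.250,516,"POS1050.pcommerce.local",'''
    r'''"192.168.1.100","W","Alert","File access control",'''
    r'''"TESTMODE: The process '<remote application>' (as user SFORCE\Stuart.Young) '''
    r'''attempted to access 'C:\Iris\Bin\RestartSVS.bat'. The attempted access was a write '''
    r'''(operation = OPEN/CREATE). The operation would have been denied.",'''
    r'''"FACL_DENY_TESTMODE","RestartSVS.bat","<remote application >",,,,,867'''
)

# Column positions counted from the sample above (assumed, not documented).
MESSAGE, VENDOR_MESSAGE_ID, OBJECT = 8, 9, 10


def parse_csa(line: str) -> dict:
    """Split the CSV record, then pull the user and process out of the message."""
    fields = next(csv.reader(io.StringIO(line)))
    message = fields[MESSAGE]
    result = {
        "Vendor Message ID": fields[VENDOR_MESSAGE_ID],
        "Object": fields[OBJECT],
    }
    # "as user DOMAIN\login" -> Domain and Origin Login.
    user = re.search(r"as user (\w+)\\([\w.]+)", message)
    if user:
        result["Domain"] = user.group(1)
        result["Origin Login"] = user.group(2)
    # "The process '<name>'" -> Process.
    process = re.search(r"The process '<([^>]+)>'", message)
    if process:
        result["Process"] = process.group(1)
    return result


print(parse_csa(RAW_LOG))
```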

UDLA Log Example

Raw UDLA Log

DATE_TIME=1/15/2009 8:40:12 AM DURATION=0 DISPOSITION_CODE=1026 CATEGORY=9 FILE_TYPE_ID= HITS=1 BYTES_SENT=8503 BYTES_RECEIVED=4712 RECORD_NUMBER=500000024145 USER_ID=2498 SOURCE_IP=2.8.3.5 SOURCE_SERVER_IP=1.1.1.1 DESTINATION_IP=3.1.6.3 PORT=80 PROTOCOL_ID=1 URL=Host126 FULL_URL=http://Host126/b/ss/seatimesseattletimescom,seatimesglobalprod/1/= KEYWORD_ID=

UDLA Log Processed Information

Log Source = UDLA – WebSense

Matched Rule = EVID 1026: URL Access Allowed

Common Event = URL Access Allowed

Classification = Audit / Access Success

Metadata Fields:

Vendor Message ID = 1026

Origin Host = 2.8.3.5

Impacted Host = 3.1.6.3

Impacted Port = 80

URL = http://Host126/b/ss/seatimesseattletimescom,seatimesglobalprod/1/

Bytes In = 4712

Bytes Out = 8503
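The UDLA record is a sequence of KEY=value pairs in which a value can contain spaces (DATE_TIME) or be empty (FILE_TYPE_ID). The Python sketch below splits the pairs by treating each value as running until the next " KEY=" or the end of the line, then maps source keys to the metadata fields listed above. The FIELD_MAP is inferred from this single example and is hypothetical, not LogRhythm's UDLA configuration. URL is left out because the raw FULL_URL value carries a trailing "=" that the processed URL omits, and how that is trimmed is not described here.

```python
import re

RAW_LOG = (
    "DATE_TIME=1/15/2009 8:40:12 AM DURATION=0 DISPOSITION_CODE=1026 CATEGORY=9 "
    "FILE_TYPE_ID= HITS=1 BYTES_SENT=8503 BYTES_RECEIVED=4712 RECORD_NUMBER=500000024145 "
    "USER_ID=2498 SOURCE_IP=2.8.3.5 SOURCE_SERVER_IP=1.1.1.1 DESTINATION_IP=3.1.6.3 "
    "PORT=80 PROTOCOL_ID=1 URL=Host126 "
    "FULL_URL=http://Host126/b/ss/seatimesseattletimescom,seatimesglobalprod/1/= KEYWORD_ID="
)

# A value runs (lazily) until the next " KEY=" or the end of the line.
PAIR = re.compile(r"(\w+)=(.*?)(?=\s+\w+=|$)")

# Hypothetical mapping from source keys to the metadata fields listed above.
FIELD_MAP = {
    "DISPOSITION_CODE": "Vendor Message ID",
    "SOURCE_IP": "Origin Host",
    "DESTINATION_IP": "Impacted Host",
    "PORT": "Impacted Port",
    "BYTES_RECEIVED": "Bytes In",
    "BYTES_SENT": "Bytes Out",
}


def parse_udla(line: str) -> dict:
    """Split KEY=value pairs, then rename the keys listed in FIELD_MAP."""
    pairs = dict(PAIR.findall(line))
    return {target: pairs[source] for source, target in FIELD_MAP.items() if source in pairs}


print(parse_udla(RAW_LOG))
```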

Log Message Classifications

LogRhythm uses classifications to group similar log messages into logical containers. These classifications provide organization to vast amounts of log data, making it easier to sort through and understand. Classifications fall under three main categories: